Automated model testing & documentation

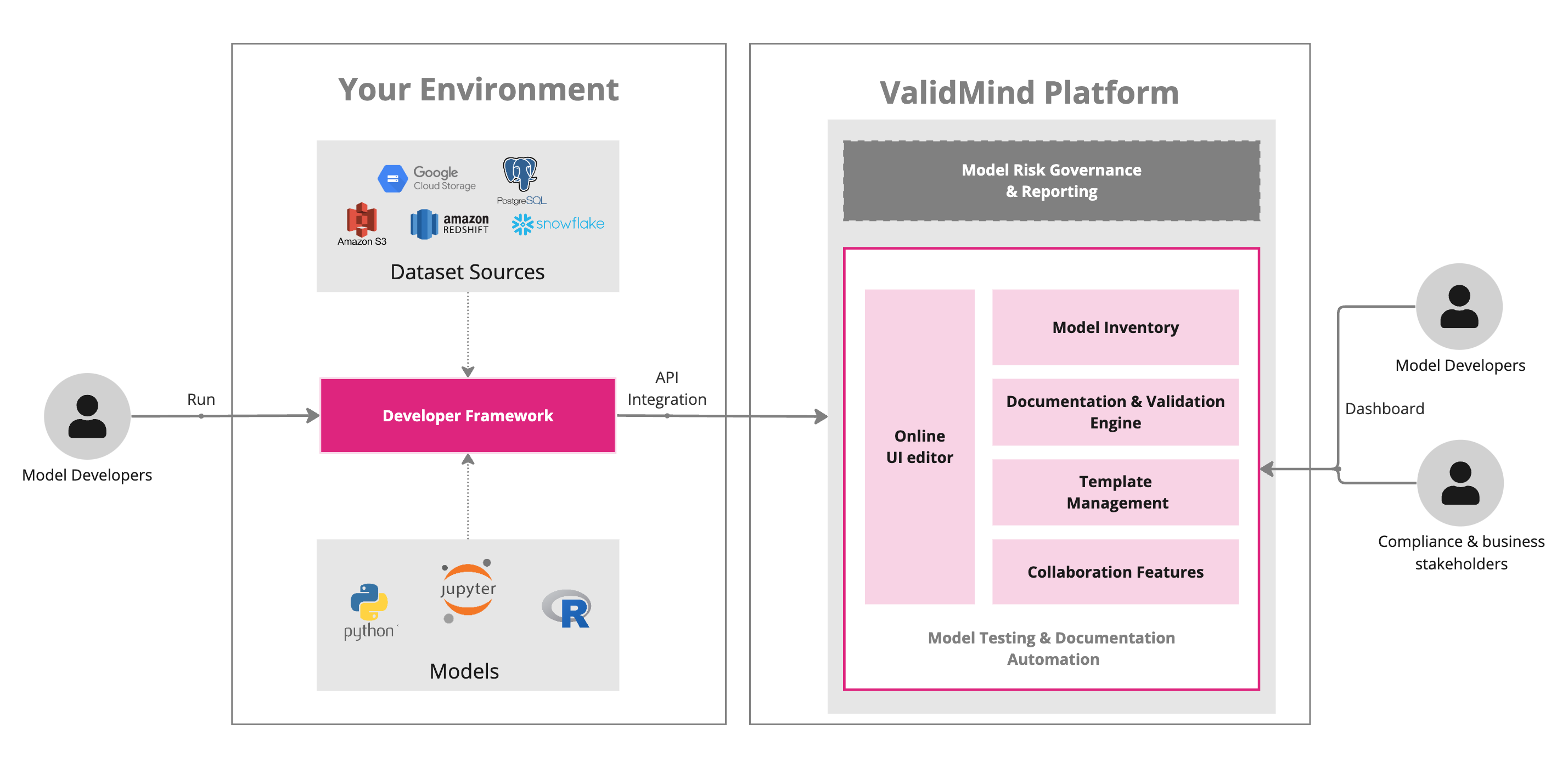

The ValidMind Developer Framework streamlines the process of documenting various types of models. It automates the documentation process so that your model documentation and testing align with regulatory and compliance standards.

The ValidMind Developer Framework

The ValidMind Developer Framework is a developer framework and documentation engine designed to streamline the process of documenting various types of models, including traditional statistical models, legacy systems, artificial intelligence/machine learning models, and large language models (LLMs). It offers model developers a systematic approach to documenting and testing risk models with repeatability and consistency, ensuring alignment with regulatory and compliance standards.

The ValidMind Developer Framework consists of a client-side library, API integration for models and testing, and validation tests that streamline the model development process. Implemented as a series of independent libraries in Python and R, our developer framework ensures compatibility and flexibility with diverse sets of developer environments and requirements.

With the developer framework, you can:

- Automate documentation — Add comprehensive documentation as metadata while you build models to be shared with model validators, streamlining and speeding up the process.

- Run test suites — Identify potential risks for a diverse range of statistical and AI/LLM/ML models by assessing data quality, model outcomes, robustness, and explainability.

- Integrate with your development environment — Seamlessly incorporate the ValidMind Developer Framework into your existing model development environment, connecting to your existing model code and data sets.

- Upload documentation data — Send qualitative and quantitative test data to the AI risk platform to generate the model documentation for review and approval, fostering effective collaboration with model reviewers and validators.

Simple installation

Install the developer framework with:

%pip install validmind

Docs-as-code

What the ValidMind Developer Framework offers:

- Generates documentation artifacts utilizing the context of the model and dataset, the model’s metadata, and the chosen documentation template.

- Can be easily imported into your local model development environment. The supported platforms include Python and R.

- Dual-licensed: the developer framework is available as open source under the AGPL v3 license and also under a commercial software license.

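import validmind as vm

# Connect to your ValidMind project
vm.init(project="PROJECT_IDENTIFIER")

# Log the training dataset and run the dataset tests
# (df and targets come from your own code)
vm_dataset = vm.log_dataset(
    df,
    "training",
    targets=targets,
)
vm.run_dataset_tests(df, vm_dataset=vm_dataset)

# Log the model and its training metrics, then run the model tests
vm.log_model(model)
vm.log_training_metrics(model, x_train, y_train)
vm.run_model_tests(model, x_test, y_test)

How the ValidMind Developer Framework works: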

- The tests and functions are executed automatically, following pre-configured templates tailored for specific model use cases. This ensures that minimum documentation requirements are consistently fulfilled.

- The developer framework integrates with ETL/data processing pipelines using connector interfaces. This enables the extraction of relationships between raw data sources and their corresponding post-processed datasets, such as preloaded session instances received from platforms like Spark and Snowflake.
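The template-driven execution described above can be illustrated with a minimal sketch. Everything here is hypothetical: the `TESTS` registry, the `TEMPLATES` mapping, and `run_template` are illustrative names and not part of the ValidMind API. The sketch only shows the general idea of a template listing the tests a given model use case must run, so that the minimum set is always executed.

```python
from typing import Callable, Dict, List, Optional

Values = List[Optional[float]]

# Hypothetical registry of test functions, keyed by test name.
TESTS: Dict[str, Callable[[Values], dict]] = {
    "row_count": lambda values: {
        "value": len(values),
        "passed": len(values) > 0,
    },
    "no_missing": lambda values: {
        "value": sum(v is None for v in values),
        "passed": all(v is not None for v in values),
    },
}

# Hypothetical templates: each model use case lists the tests it requires.
TEMPLATES: Dict[str, List[str]] = {
    "binary_classification": ["row_count", "no_missing"],
}


def run_template(use_case: str, values: Values) -> Dict[str, dict]:
    """Run every test that the template for `use_case` requires."""
    return {name: TESTS[name](values) for name in TEMPLATES[use_case]}


if __name__ == "__main__":
    for name, result in run_template("binary_classification", [1.0, None, 3.0]).items():
        print(name, result)
```

Because the template, not the caller, decides which tests run, a developer cannot accidentally skip a required check for that use case.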

Extensible by design

In Financial Services, our platform supports various model types, including:

- Traditional machine learning models (ML) such as tree-based models and neural network models.

- Natural language processing models (NLP) for text analysis and understanding.

- Large language models (LLMs), currently in beta testing, offering advanced language capabilities.

- Traditional statistical models like Ordinary Least Squares (OLS) regression, logistic regression, time series models, and more.

Our platform is designed to be highly extensible to cater to our customers’ specific requirements. You can expand its functionality in the following ways:

- You can easily add support for new models and data types by defining new classes within the ValidMind Developer Framework. We provide templates to guide you through this process.

- To include custom tests in the library, you can define new functions. We offer templates to help you create these custom tests.

- You have the flexibility to integrate third-party test libraries seamlessly. These libraries can be hosted either locally within your infrastructure or remotely, for example, on GitHub. Leverage additional testing capabilities and resources as needed.
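As a rough illustration of defining a custom test as a function, the sketch below registers a simple class-imbalance check through a decorator. This is an assumption-laden example, not the framework's actual extension mechanism: `CUSTOM_TESTS`, `custom_test`, and `class_imbalance` are hypothetical names, and the real templates provided by ValidMind may look quite different.

```python
from collections import Counter
from typing import Callable, Dict, Hashable, Sequence

# Hypothetical registry that a test library could use to discover custom tests.
CUSTOM_TESTS: Dict[str, Callable[..., dict]] = {}


def custom_test(name: str):
    """Hypothetical decorator that registers a function as a named test."""
    def decorator(func: Callable[..., dict]) -> Callable[..., dict]:
        CUSTOM_TESTS[name] = func
        return func
    return decorator


@custom_test("class_imbalance")
def class_imbalance(labels: Sequence[Hashable], min_fraction: float = 0.1) -> dict:
    """Fail when the rarest class makes up less than `min_fraction` of the labels."""
    if not labels:
        raise ValueError("labels must not be empty")
    total = len(labels)
    fractions = {label: count / total for label, count in Counter(labels).items()}
    return {
        "fractions": fractions,
        "passed": min(fractions.values()) >= min_fraction,
    }


if __name__ == "__main__":
    # 19 negatives and 1 positive: the positive class is only 5% of the data.
    print(CUSTOM_TESTS["class_imbalance"]([0] * 19 + [1]))
```

Keeping each test a plain function that returns a result dictionary makes it straightforward to host such tests locally or in a separate repository and load them by name.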

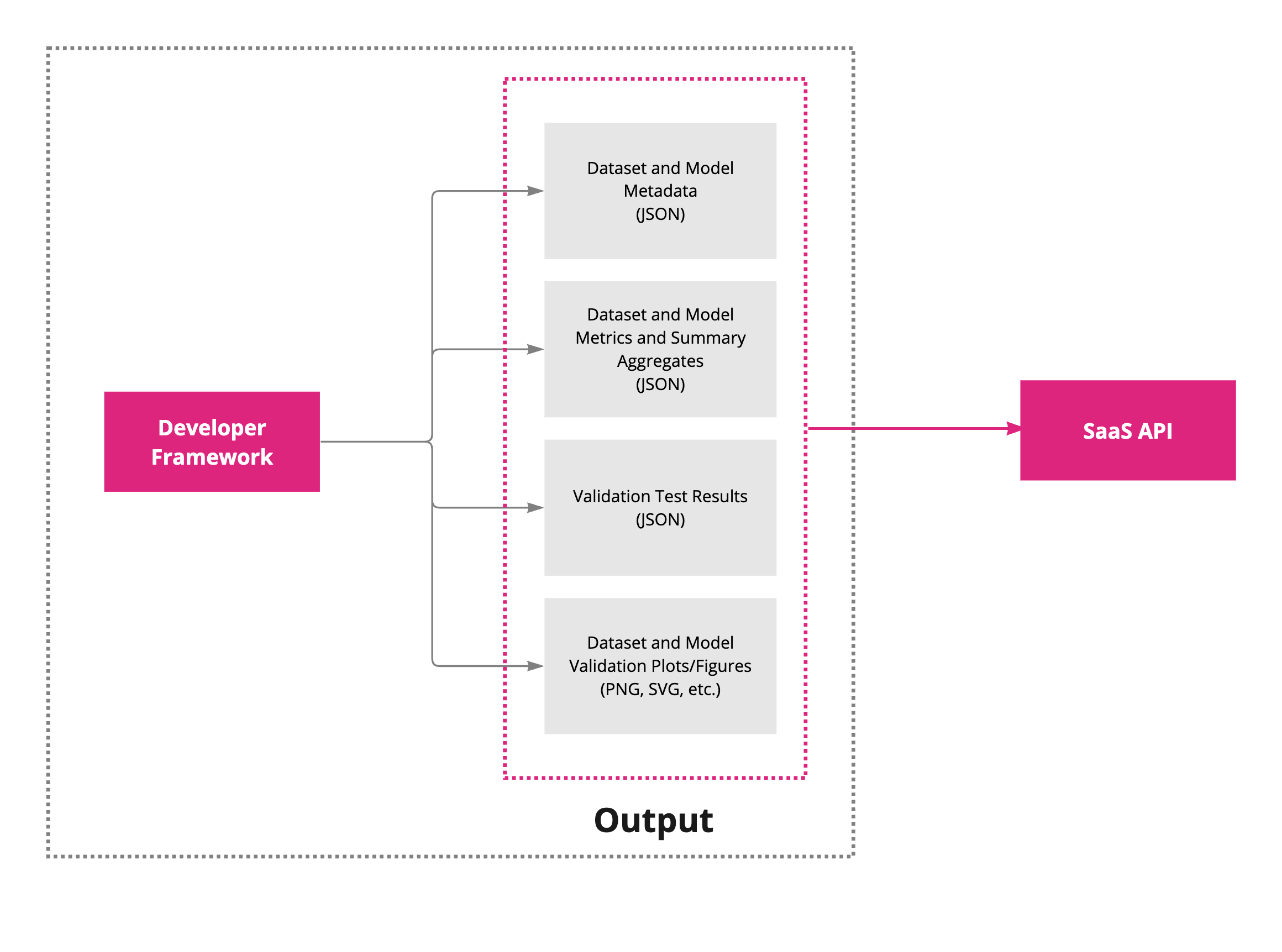

API integration

ValidMind imports the following artifacts into the documentation via SaaS API endpoint integration:

- Metadata about datasets and models, used to look up programmatic documentation content, such as the stored definition of common logistic regression limitations when a logistic regression model is passed to a ValidMind test plan.

- Quality and performance metrics collected from datasets and models.

- Output from tests and test suites that have been run.

- Images, plots, and visuals that were generated as part of extracting metrics and running tests.

ValidMind does not:

- Send any personally identifiable information (PII) when generating documentation reports.

- Store any customer datasets or models.
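To make the imported artifact categories more concrete, here is a minimal sketch of how such a payload could be structured before being sent to an API endpoint. The `DocumentationPayload` class and its field names are assumptions made for illustration. They do not reflect ValidMind's actual request schema. Note that the sketch carries only metadata, aggregate metrics, test output, and figure references, with no raw dataset rows or model objects.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List


@dataclass
class DocumentationPayload:
    """Hypothetical container for the artifact categories listed above."""

    model_metadata: Dict[str, str]           # e.g. model architecture, dataset name
    metrics: Dict[str, float]                # quality and performance metrics
    test_results: List[dict] = field(default_factory=list)  # output of tests run
    figure_paths: List[str] = field(default_factory=list)   # generated plots

    def to_json(self) -> str:
        """Serialize the payload to a JSON string with stable key order."""
        return json.dumps(asdict(self), sort_keys=True)


if __name__ == "__main__":
    payload = DocumentationPayload(
        model_metadata={"architecture": "logistic_regression"},
        metrics={"auc": 0.91},
        test_results=[{"name": "class_imbalance", "passed": True}],
        figure_paths=["roc_curve.png"],
    )
    print(payload.to_json())
```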

Ready to try out ValidMind?

Our QuickStart is the quickest and easiest way to try out our product features.