Ongoing monitoring

Monitoring of model performance in model risk management involves regularly assessing a model’s accuracy, stability, and robustness to ensure it remains reliable after deployment.

Monitoring is a critical component of model risk management, as emphasized in regulations such as SR 11-7, SS1/23, and E-23,¹ and includes:

- Tracking key performance indicators, detecting model drift, recalibrating as needed, and validating assumptions.

- Backtesting to verify predictive power, maintaining transparent reporting and governance, and ensuring ongoing compliance with regulatory standards.

- Continuous monitoring to sustain the model’s integrity and mitigate risks associated with its use as they arise.

Monitoring scenarios

Scenarios where ongoing monitoring is warranted:

Pre-approval monitoring of new models — New models, particularly high-risk or regulatory models, should undergo a trial phase of monitoring to confirm their reliability before full approval and deployment.

These trial phases are typically short, ranging from a few days to several weeks.

Monitoring during significant updates — When a model undergoes a significant update, ongoing monitoring should compare the updated model’s performance to the original. This process, called a parallel run, involves running both models simultaneously for a set period.

Model outputs should be closely monitored to assess whether the update improves performance or introduces new risks. The results help determine if the updated model should replace the original or if further adjustments are needed. Parallel runs are especially important for regulatory or critical models, ensuring changes don’t harm performance.
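The comparison made during a parallel run can be sketched as follows. This is a minimal illustration, not a prescribed method: it assumes both models produce numeric predictions for the same observations and that realized outcomes are available. Mean absolute error is an arbitrary choice of metric, and `compare_parallel_run` and its `tolerance` parameter are hypothetical names introduced for this example.

```python
from statistics import mean


def mean_absolute_error(actuals, predictions):
    """Average absolute gap between realized outcomes and predictions."""
    return mean(abs(a - p) for a, p in zip(actuals, predictions))


def compare_parallel_run(actuals, original_preds, updated_preds, tolerance=0.0):
    """Score both models on the same observations from the parallel-run window.

    `tolerance` is a hypothetical margin: the updated model is only
    recommended if it lowers the error by more than this amount.
    """
    original_mae = mean_absolute_error(actuals, original_preds)
    updated_mae = mean_absolute_error(actuals, updated_preds)
    improvement = original_mae - updated_mae
    return {
        "original_mae": original_mae,
        "updated_mae": updated_mae,
        "improvement": improvement,
        "recommend_update": improvement > tolerance,
    }


# Illustrative values only, not real model output.
actuals = [10.0, 12.0, 9.0, 11.0]
original = [11.0, 13.0, 8.0, 13.0]
updated = [10.5, 12.5, 9.0, 11.5]
result = compare_parallel_run(actuals, original, updated)
```

A single error metric rarely settles the decision on its own. In practice the same comparison would likely be repeated for each metric named in the monitoring plan.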

Post-production monitoring — After deployment into production, models should be regularly tested against performance benchmarks to identify deviations, enabling timely recalibrations or adjustments.

These benchmarks should be defined in advance so that each assessment has a clear standard to meet. Any deviation from expected performance should be identified and investigated quickly, allowing for timely intervention.
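As a rough sketch of checking production output against a predefined benchmark, the example below scores a classifier period by period and flags the periods that fall short. It assumes realized outcomes become available for each period. The accuracy metric, the 0.75 benchmark, and the function names are illustrative assumptions rather than recommended values.

```python
def accuracy(actuals, predictions):
    """Share of predictions that match the realized outcome."""
    matches = sum(1 for a, p in zip(actuals, predictions) if a == p)
    return matches / len(actuals)


def flag_deviations(periods, benchmark):
    """Return (period label, accuracy) for every period scoring below the benchmark.

    `periods` maps a period label to a pair (actuals, predictions).
    """
    flagged = []
    for label, (actuals, predictions) in periods.items():
        score = accuracy(actuals, predictions)
        if score < benchmark:
            flagged.append((label, score))
    return flagged


# Illustrative data only.
periods = {
    "2024-01": ([1, 0, 1, 1], [1, 0, 1, 1]),  # 4 of 4 correct
    "2024-02": ([1, 0, 0, 1], [1, 1, 0, 1]),  # 3 of 4 correct
    "2024-03": ([0, 0, 1, 1], [1, 1, 1, 1]),  # 2 of 4 correct
}
deviations = flag_deviations(periods, benchmark=0.75)
```

Here only the third period falls below the benchmark, so it is the only one returned for follow-up.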

Ongoing monitoring plan

A robust ongoing monitoring plan is crucial for maintaining model accuracy and reliability. Model developers should start regular monitoring from the outset, ideally during the pre-approval phase of model development, refining the plan as new insights are gained. This plan should be included in the initial model documentation, with clear instructions for execution and use of results.²

As monitoring continues, it’s important to report model status to key stakeholders, such as your model risk management committee. Regular updates with summary metrics will keep stakeholders informed of findings and emerging risks, highlighting significant trends or issues that may need action.

Your ongoing monitoring plan should define:

- Metrics and models — Specify which models and performance metrics will be monitored.

- Monitoring frequency — Set how often each metric will be monitored, based on the model’s risk and importance.

- Risk thresholds — Establish thresholds that trigger alerts or actions when metrics deviate from expected ranges.

- Notification system — Implement a system to notify stakeholders when significant issues or deviations occur.

- Regular reports — Present monitoring results using visual tools for clear and accessible decision-making.
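One way to make these elements concrete is to record the plan as a small data structure that can be evaluated automatically. The sketch below is an assumption about how such a plan might be represented, not a required format: `MetricRule`, `MonitoringPlan`, the metric names, the bounds, and the recipient address are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class MetricRule:
    """One monitored metric with its frequency and acceptable range."""
    name: str
    frequency: str      # for example "daily" or "monthly"
    lower_bound: float
    upper_bound: float


@dataclass
class MonitoringPlan:
    """Which model is monitored, under which rules, and who is notified."""
    model_name: str
    rules: list = field(default_factory=list)
    recipients: list = field(default_factory=list)

    def evaluate(self, observed):
        """Return one alert message per metric that is missing or out of range."""
        alerts = []
        for rule in self.rules:
            value = observed.get(rule.name)
            if value is None:
                alerts.append(f"{rule.name}: no value reported")
            elif not rule.lower_bound <= value <= rule.upper_bound:
                alerts.append(
                    f"{rule.name}: {value} outside "
                    f"[{rule.lower_bound}, {rule.upper_bound}]"
                )
        return alerts


# Hypothetical plan; the thresholds are placeholders, not recommendations.
plan = MonitoringPlan(
    model_name="credit_scorecard",
    rules=[
        MetricRule("auc", "monthly", 0.70, 1.00),
        MetricRule("psi", "monthly", 0.00, 0.25),
    ],
    recipients=["model-risk-committee@example.com"],
)
alerts = plan.evaluate({"auc": 0.66, "psi": 0.10})
```

The returned alert messages would then feed whatever notification and reporting channels your organization uses. Those channels are outside the scope of this sketch.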

Key concepts

- backtesting

- Comparing a model’s predictions against actual outcomes to verify its predictive power and reliability.

- compliance and regulatory adherence

- Ensuring that the model continues to meet evolving regulatory requirements and standards.

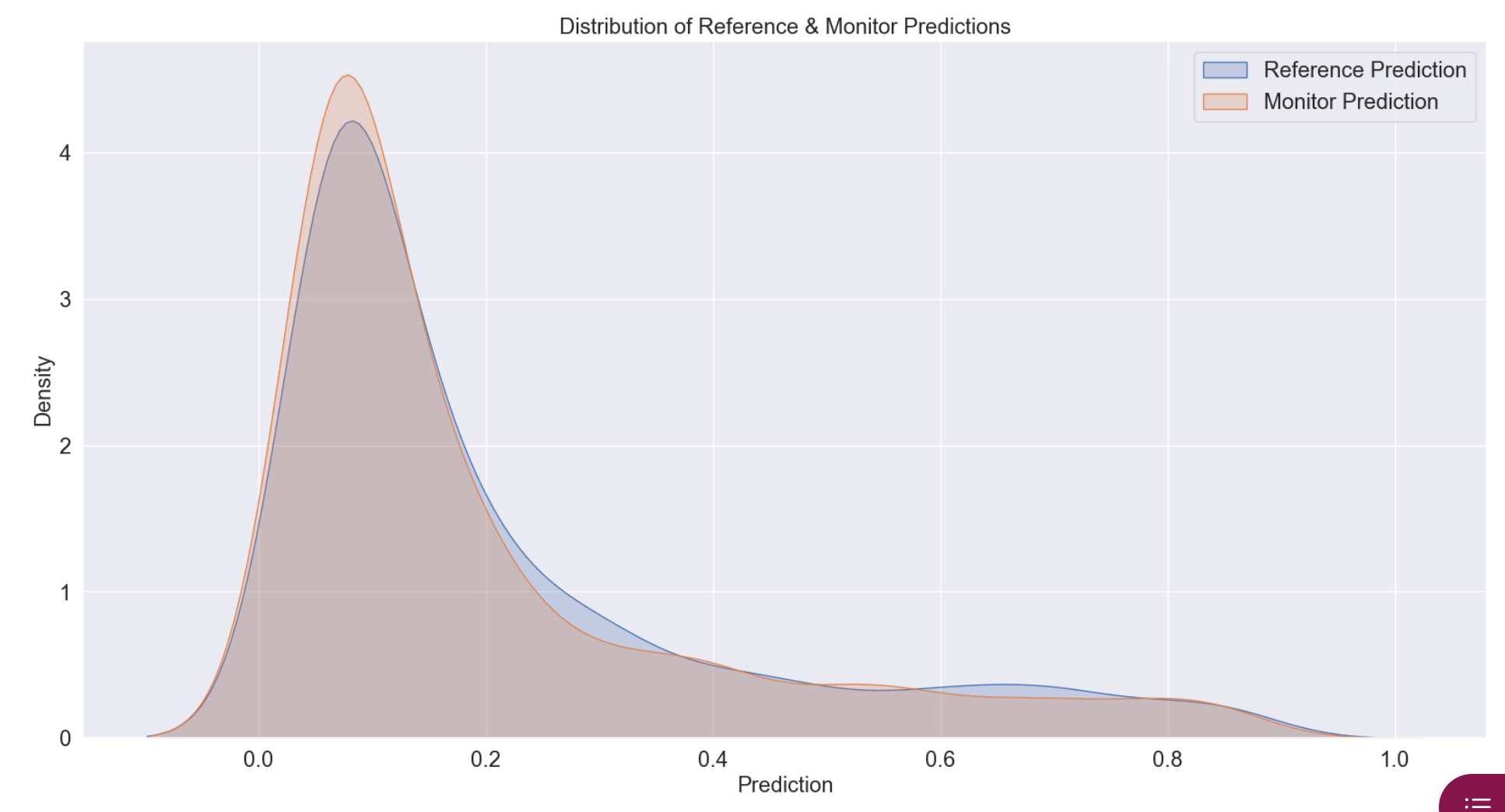

- model drift

- Changes in data patterns, input distributions, or model behavior that may indicate a degradation in model performance over time.

- model performance

- The measure of a model’s accuracy, stability, and robustness in achieving its intended outcomes, which is regularly evaluated through monitoring after deployment to ensure ongoing reliability.

- ongoing monitoring

- A periodic report assessing the model’s performance and compliance over time, ensuring it remains valid under changing conditions.

- recalibrating models

- The process of adjusting a model to account for detected drift or changes in the underlying data or environment.

- reporting and governance

- The documentation of monitoring findings and communication to stakeholders to support decision-making and maintain transparency.
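To illustrate how a shift in input distributions might be quantified, the sketch below computes the population stability index (PSI), one commonly used drift measure. The text above does not name a specific measure, so PSI is an assumption chosen for illustration. The example assumes the baseline and current data have already been counted into the same bins, and the `epsilon` floor for empty bins is a hypothetical convenience.

```python
import math


def psi(expected_counts, actual_counts, epsilon=1e-6):
    """Population stability index over matching bins.

    Sums (a - e) * ln(a / e) across bins, where e and a are the baseline
    and current share of observations in each bin. `epsilon` floors the
    shares so that an empty bin does not cause a division by zero.
    """
    expected_total = sum(expected_counts)
    actual_total = sum(actual_counts)
    total = 0.0
    for e_count, a_count in zip(expected_counts, actual_counts):
        e = max(e_count / expected_total, epsilon)
        a = max(a_count / actual_total, epsilon)
        total += (a - e) * math.log(a / e)
    return total


# Illustrative bin counts only.
baseline = [25, 25, 25, 25]
current = [10, 20, 30, 40]
drift_score = psi(baseline, current)
```

A score of zero means the two distributions match bin for bin, and larger values indicate a larger shift. The level that should trigger recalibration is a judgment for the monitoring plan to set.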

Design and implementation

The design of your ongoing monitoring plan should be a collaborative effort between the first line of defense, typically business units or model owners, and the second line of defense, namely your risk management team. Together, they should select the performance metrics, determine monitoring frequency, and tailor the ongoing monitoring plan to the model’s specific use case and risk profile.

In practice, the ongoing monitoring plan is primarily designed and implemented by the developers involved in the model’s development and deployment. Their work is then reviewed during model validation to confirm that the plan is robust and aligned with risk management goals.

Implementing the ongoing monitoring plan also typically falls to model developers. This effort includes executing the monitoring activities, collecting performance data, and generating reports. The model developers also ensure that the monitoring is carried out according to the established schedule and that any anomalies or deviations are promptly identified and addressed.

Testing

Model monitoring should incorporate a variety of tests to ensure ongoing accuracy and reliability.

Key test areas to pay attention to:

- Benchmarking — Compare model estimates with alternative estimates to validate their accuracy.

- Sensitivity testing — Reaffirm the model’s robustness and stability under different conditions.

- Analysis of overrides — Evaluate any adjustments made to the model to understand their impact.

- Parallel outcomes analysis — Assess whether new data should be included in the model’s calibration to enhance its performance.
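As an example of one of these test areas, sensitivity testing can be sketched by shifting a single input and recording how the output moves. This is a minimal illustration under stated assumptions: the model is treated as a plain function of a dictionary of numeric inputs, and `toy_model`, its coefficients, and the shock sizes are hypothetical stand-ins.

```python
def sensitivity(model, baseline_inputs, feature, shocks):
    """Change in model output when one input is shifted by each shock.

    All other inputs are held at their baseline values.
    """
    base_output = model(baseline_inputs)
    results = {}
    for shock in shocks:
        shocked = dict(baseline_inputs)  # copy so the baseline is not mutated
        shocked[feature] = baseline_inputs[feature] + shock
        results[shock] = model(shocked) - base_output
    return results


def toy_model(inputs):
    """Hypothetical stand-in for a deployed model."""
    return 2.0 * inputs["income"] - 0.5 * inputs["debt"]


deltas = sensitivity(toy_model, {"income": 10.0, "debt": 4.0}, "debt", [-1.0, 1.0])
```

Output changes that are disproportionately large relative to the size of the shock would suggest the model is less stable in that region than expected.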